Building Smart Chat History Summarization in LarAgent with AI

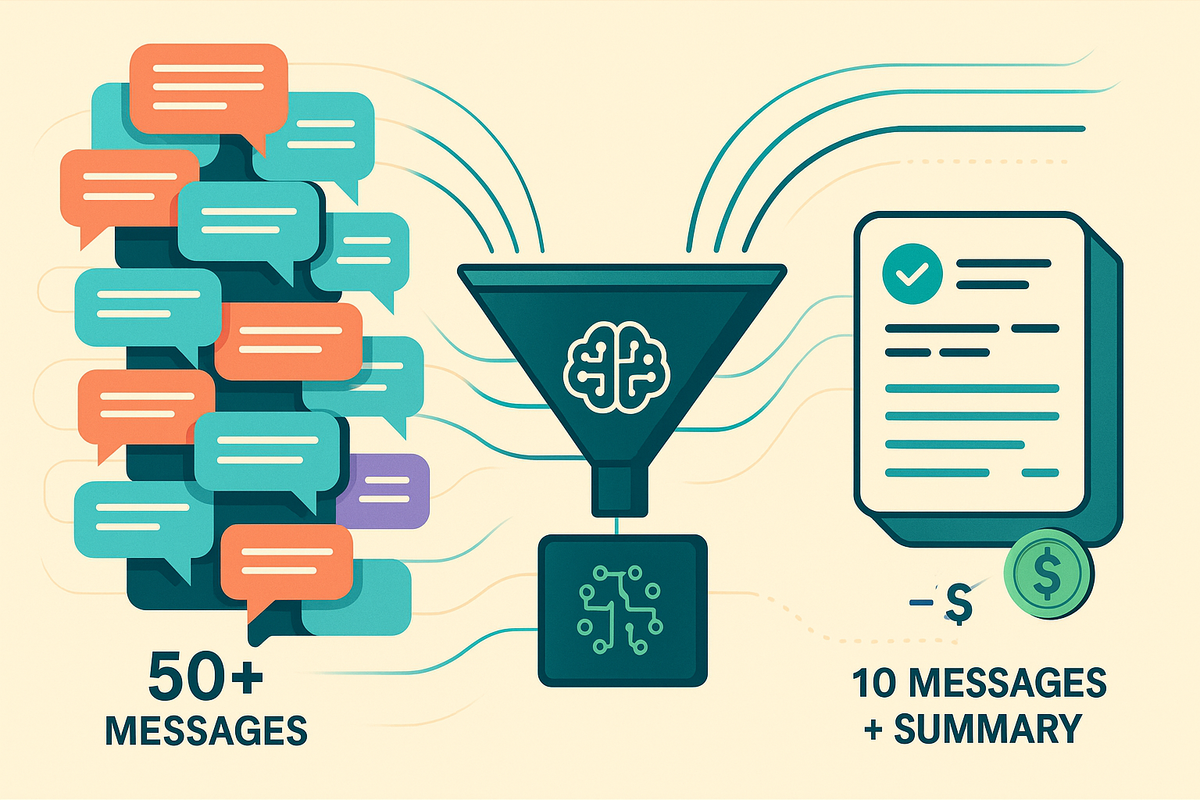

Ever watched your AI agent's token costs climb as conversations grow longer?

Here's the thing: AI agents need context to be useful. But when your chat history balloons past 50, 100, or even 200 messages, you're burning through tokens like crazy, slowing down responses, and risking context overflow.

It's like trying to remember an entire book every time someone asks you a simple question.

The good news? You don't need to throw away your conversation history. You just need to be smarter about it.

In this tutorial, I'll show you how to build an automatic chat history summarization system in LarAgent.

When your conversations hit 50+ messages, the system creates a detailed summary, stores it safely, and feeds it back to your agent as concise context.

You keep the conversation quality, slash your token costs, and never worry about context limits again.

Let's dive in.

Understanding the Summarization Strategy

Before we start coding, let's map out what we're building. The strategy is simpler than you might think.

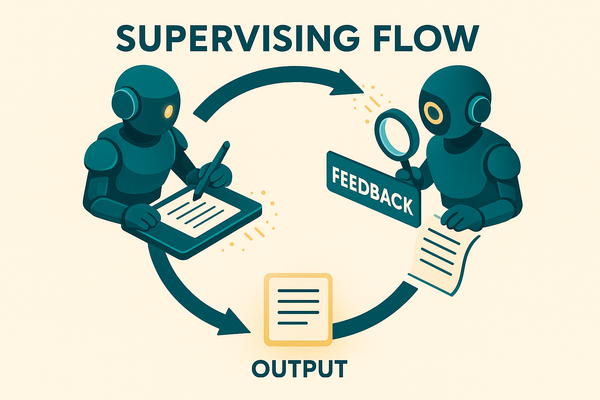

Here's the flow:

- Monitor - Watch the message count in your chat history

- Trigger - When we hit 50 messages, kick off summarization

- Summarize - Use an AI agent to create a detailed summary of the conversation

- Store - Save that summary in your database

- Clean - Keep only the last 10-15 messages in the active history

- Inject - On the next interaction, slip the summary into the agent's context as a developer message

One more thing: we'll do all this asynchronously using Laravel queues. Your users won't wait around while the summarization happens. They get their response instantly, and the cleanup happens in the background.
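To make the flow concrete before we touch Laravel, here is a plain-PHP simulation of the loop. It is a sketch under simplifying assumptions: messages are plain arrays, an array stands in for the database table, summarizeStub() is a hypothetical placeholder for the AI summarizer, and everything runs synchronously instead of on a queue.

```php
<?php

// Framework-free sketch of the monitor → trigger → summarize → store → clean → inject loop.
// summarizeStub() is a hypothetical stand-in for the AI summarizer agent.

const THRESHOLD = 50;   // summarize once the history reaches this many messages
const KEEP_RECENT = 10; // messages left in the active history after cleanup

function summarizeStub(array $messages): string
{
    return 'Summary of ' . count($messages) . ' messages';
}

$history = [];   // active chat history
$summaries = []; // stands in for the chat_summaries table

for ($turn = 1; $turn <= 30; $turn++) {
    // Each exchange adds a user message and an assistant reply
    $history[] = ['role' => 'user', 'content' => "Question $turn"];
    $history[] = ['role' => 'assistant', 'content' => "Answer $turn"];

    // Monitor + trigger
    if (count($history) >= THRESHOLD) {
        $summaries[] = summarizeStub($history);          // summarize + store (queued in the real system)
        $history = array_slice($history, -KEEP_RECENT); // clean
    }
}

// Inject: the next request carries the active history plus the latest summary
$nextRequest = array_merge(
    $history,
    [['role' => 'developer', 'content' => end($summaries)]]
);

echo count($summaries), ' summary stored, ', count($nextRequest), " messages in the next request\n";
// prints: 1 summary stored, 21 messages in the next request
```

After 30 exchanges (60 messages), the next request carries only 21: the 10 messages kept at cleanup, the 10 added since, and the summary.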

Let's build it.

The instructions below were written for LarAgent v0.8. Some approaches may have changed since then, so check the LarAgent docs for the latest details.

Setting Up the Project

I'm assuming you've got Laravel up and running with LarAgent already installed. If not, check out the LarAgent quickstart first.

Create the Database Migration

We need a place to store our summaries. Run this:

```bash
php artisan make:migration create_chat_summaries_table
```

Open the migration file and add this structure:

```php
<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    public function up(): void
    {
        Schema::create('chat_summaries', function (Blueprint $table) {
            $table->id();
            $table->string('chat_history_id')->index();
            $table->text('summary_text');
            $table->integer('message_count');
            $table->json('metadata')->nullable();
            $table->timestamps();
        });
    }

    public function down(): void
    {
        Schema::dropIfExists('chat_summaries');
    }
};
```

The chat_history_id will match your LarAgent chat identifier, message_count helps us track when we last summarized, and metadata is there for any extra info you want to store later (like token counts or summary quality scores).

Run the migration:

```bash
php artisan migrate
```

Create the Eloquent Model

Generate the model:

```bash
php artisan make:model ChatSummary
```

And fill it in:

```php
<?php

namespace App\Models;

use Illuminate\Database\Eloquent\Model;

class ChatSummary extends Model
{
    protected $fillable = [
        'chat_history_id',
        'summary_text',
        'message_count',
        'metadata',
    ];

    protected $casts = [
        'metadata' => 'array',
    ];
}
```

Simple and clean. Now we can store and retrieve summaries easily.

Creating the Summary Manager Service

This is the heart of our system: a service class that handles all the summarization logic. Create it manually in app/Services/ChatSummaryManager.php:

```php
<?php

namespace App\Services;

use App\AiAgents\ChatSummarizerAgent;
use App\Models\ChatSummary;

class ChatSummaryManager
{
    protected int $threshold = 50;

    protected int $keepRecentMessages = 10;

    public function shouldSummarize(string $chatHistoryId, int $currentCount): bool
    {
        // Don't summarize if below threshold
        if ($currentCount < $this->threshold) {
            return false;
        }

        // Check if we already have a recent summary
        $lastSummary = ChatSummary::where('chat_history_id', $chatHistoryId)
            ->latest()
            ->first();

        // If no summary exists, definitely summarize
        if (! $lastSummary) {
            return true;
        }

        // Only re-summarize if we've added significant new messages
        return $currentCount >= ($lastSummary->message_count + 20);
    }

    public function processAndSummarizeFromMessages(array $messages, string $chatHistoryId): void
    {
        if (count($messages) < $this->threshold) {
            return;
        }

        // Format messages for summarization
        $formattedMessages = $this->formatMessages($messages);

        // Generate summary using AI
        $summaryText = $this->generateSummary($formattedMessages);

        // Store the summary
        ChatSummary::create([
            'chat_history_id' => $chatHistoryId,
            'summary_text' => $summaryText,
            'message_count' => count($messages),
            'metadata' => [
                'generated_at' => now()->toIso8601String(),
                'total_messages' => count($messages),
            ],
        ]);
    }

    protected function formatMessages(array $messages): string
    {
        $formatted = "Conversation History:\n\n";

        foreach ($messages as $message) {
            // Queued messages arrive as plain arrays (from $history->toArray()),
            // but message objects are accepted too
            $role = is_array($message) ? ($message['role'] ?? '') : $message->getRole();
            $content = is_array($message) ? ($message['content'] ?? '') : $message->getContent();

            // Skip tool calls and results for a cleaner summary
            if (in_array(strtolower((string) $role), ['tool', 'tool_result'], true)) {
                continue;
            }

            // Multimodal content: keep only the text parts
            if (is_array($content)) {
                $content = implode("\n", array_column($content, 'text'));
            }

            // Skip messages with no text (e.g. assistant tool-call messages)
            if (! is_string($content) || trim($content) === '') {
                continue;
            }

            $formatted .= '[' . ucfirst((string) $role) . "]: $content\n\n";
        }

        return $formatted;
    }

    protected function generateSummary(string $conversationText): string
    {
        // Use the summarizer agent with in_memory history (no persistence needed)
        $summary = ChatSummarizerAgent::make()
            ->message($conversationText)
            ->respond();

        return $summary;
    }
}
```

This service does the heavy lifting:

- shouldSummarize() checks whether we need to create a summary

- processAndSummarizeFromMessages() creates and stores the summary

- formatMessages() turns the messages into readable text

- generateSummary() calls our AI summarizer

The cleanup of old messages happens in the agent's afterSend hook, which we'll create below.
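To see how the two checks in shouldSummarize() interact, here is the same rule as a standalone function, with the database lookup replaced by a plain parameter. The function signature and parameter names are ours for illustration; the 50 and 20 mirror the values in the class above.

```php
<?php

// Standalone copy of the decision rule in ChatSummaryManager::shouldSummarize().
// $lastSummarizedCount stands in for ChatSummary::message_count (null = no summary yet).
function shouldSummarize(
    int $currentCount,
    ?int $lastSummarizedCount,
    int $threshold = 50,
    int $minNewMessages = 20
): bool {
    if ($currentCount < $threshold) {
        return false; // below the threshold
    }

    if ($lastSummarizedCount === null) {
        return true; // first summary for this chat
    }

    // Require enough growth since the last summary
    return $currentCount >= $lastSummarizedCount + $minNewMessages;
}

var_dump(shouldSummarize(49, null)); // bool(false)
var_dump(shouldSummarize(61, null)); // bool(true)
var_dump(shouldSummarize(70, 61));   // bool(false)
var_dump(shouldSummarize(81, 61));   // bool(true)
```

One consequence worth knowing: afterSend trims the history to 10 messages right after dispatching the job, so $history->count() starts again from about 10. If the first summary was stored with a message_count of 61, the next one waits until the trimmed history itself reaches 81 messages, not 50. If you'd rather re-summarize every time the threshold is reached, comparing against the threshold alone is one option.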

Building the Summarization Agent

Now we need an AI agent specifically designed to create quality summaries. Generate it:

```bash
php artisan make:agent ChatSummarizerAgent
```

Open app/AiAgents/ChatSummarizerAgent.php and configure it:

```php
<?php

namespace App\AiAgents;

use LarAgent\Agent;

class ChatSummarizerAgent extends Agent
{
    protected $model = 'gpt-5-mini';

    protected $history = 'in_memory'; // No need to persist summarizer's own chats

    protected $provider = 'default';

    protected $temperature = 0.3; // Lower temperature for consistent, focused summaries

    public function instructions()
    {
        return "You are an expert conversation summarizer. Your job is to create detailed, comprehensive summaries of chat conversations.

Your summary should include:

1. **Key Topics Discussed**: What were the main subjects covered?
2. **Important Decisions**: Any agreements, choices, or conclusions made
3. **User Preferences**: Revealed preferences, likes, dislikes, or constraints
4. **Questions & Answers**: Significant Q&A exchanges
5. **Action Items**: Tasks mentioned or to be completed
6. **Relevant Context**: Names, dates, locations, or other important details
7. **Conversation Flow**: How the discussion progressed and evolved

Be comprehensive but concise. Focus on information that would be valuable for continuing the conversation later. Write in a natural, narrative style that another AI can easily understand and use as context.

Do NOT include:
- Pleasantries or greetings unless they reveal something important
- Repetitive information";
    }

    public function prompt($message)
    {
        return "Analyze this conversation and create a detailed summary:\n\n$message\n\nProvide a well-structured summary covering all important aspects.";
    }
}
```

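The key here is the detailed instructions. We're telling the summarizer exactly what to look for and how to structure the summary. The lower temperature (0.3) keeps summaries consistent and factual rather than creative. Note that some models only accept their default temperature (OpenAI's GPT-5 reasoning models are one example); if your provider rejects the request, remove the $temperature property or pick a model that supports it.

Using in_memory history is perfect here because we don't need to remember what the summarizer itself said; we just need it to process one request and return a summary.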
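Here is roughly what the summarizer ends up reading. This plain-PHP sketch repeats the formatting step and the prompt() wrapper on a hand-written conversation. It assumes each message is an array with 'role' and 'content' keys, which is the shape we expect from $history->toArray(); check this against your LarAgent version.

```php
<?php

// Sketch of the text the summarizer agent receives.
// Assumes messages are plain arrays with 'role' and 'content' keys.
function formatMessages(array $messages): string
{
    $formatted = "Conversation History:\n\n";

    foreach ($messages as $message) {
        $role = $message['role'] ?? '';
        $content = $message['content'] ?? '';

        // Skip tool traffic and messages without plain-text content
        if (in_array($role, ['tool', 'tool_result'], true) || !is_string($content) || $content === '') {
            continue;
        }

        $formatted .= '[' . ucfirst($role) . "]: $content\n\n";
    }

    return $formatted;
}

// Same wrapper text as ChatSummarizerAgent::prompt()
function summarizerPrompt(string $message): string
{
    return "Analyze this conversation and create a detailed summary:\n\n$message\n\nProvide a well-structured summary covering all important aspects.";
}

$messages = [
    ['role' => 'user', 'content' => 'My order #123 arrived damaged.'],
    ['role' => 'assistant', 'content' => null], // e.g. an assistant tool-call message
    ['role' => 'tool', 'content' => '{"status":"delivered"}'],
    ['role' => 'assistant', 'content' => 'Sorry about that! I have started a replacement.'],
];

echo summarizerPrompt(formatMessages($messages));
```

Tool traffic and the empty assistant tool-call message are dropped, so the summarizer only sees what the user and the agent actually said.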

Implementing Auto-Summarization Logic

Now we need to automatically trigger summarization when the threshold is hit. We'll use agent hooks and queue jobs to keep everything fast and non-blocking.

Create the Queue Job

Generate a job:

```bash
php artisan make:job SummarizeChatHistory
```

Fill it in at app/Jobs/SummarizeChatHistory.php:

```php
<?php

namespace App\Jobs;

use App\Services\ChatSummaryManager;
use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Illuminate\Support\Facades\Log;

class SummarizeChatHistory implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    public function __construct(
        public array $messages,      // plain message arrays from $history->toArray()
        public string $chatHistoryId
    ) {}

    public function handle(): void
    {
        try {
            $manager = new ChatSummaryManager();

            $manager->processAndSummarizeFromMessages(
                $this->messages,
                $this->chatHistoryId
            );

            Log::info("Chat history summarized", [
                'chat_id' => $this->chatHistoryId,
            ]);
        } catch (\Exception $e) {
            Log::error("Failed to summarize chat history", [
                'chat_id' => $this->chatHistoryId,
                'error' => $e->getMessage(),
            ]);

            // Re-throw so the queue marks this attempt as failed and can retry it
            throw $e;
        }
    }
}
```

Add Hook to Your Agent

We'll use two of the agent's built-in hooks: onInitialize to inject any stored summary, and afterSend to trigger summarization. Create your CustomerSupportAgent:

```php
<?php

namespace App\AiAgents;

use App\Models\ChatSummary;
use App\Services\ChatSummaryManager;
use App\Jobs\SummarizeChatHistory;
use LarAgent\Agent;
use LarAgent\Messages\DeveloperMessage;

class CustomerSupportAgent extends Agent
{
    protected $model = 'gpt-5';

    protected $history = 'file';

    protected $provider = 'default';

    public function instructions()
    {
        return "You are a helpful customer support agent. Assist users with their questions and issues professionally.";
    }

    protected function onInitialize()
    {
        // Check for existing summary and inject as DeveloperMessage
        $summary = $this->getConversationSummary();

        if ($summary) {
            $devMsg = new DeveloperMessage(
                "--- Previous Conversation Summary ---\n\n" .
                $summary .
                "\n\nUse this summary to maintain context from earlier in the conversation. The recent messages show the current discussion."
            );

            $this->chatHistory()->addMessage($devMsg);
        }
    }

    protected function afterSend($history, $message)
    {
        $messageCount = $history->count();
        $chatId = $history->getIdentifier();

        $manager = new ChatSummaryManager();

        if ($manager->shouldSummarize($chatId, $messageCount)) {
            // Pass messages as array - they serialize fine for the queue
            $messages = $history->toArray();

            SummarizeChatHistory::dispatch($messages, $chatId);

            // Clean up old messages immediately (fast operation)
            $this->cleanupOldMessages($history);
        }

        return true;
    }

    protected function cleanupOldMessages($history): void
    {
        $messages = $history->getMessages();
        $keepRecent = 10; // Keep last 10 messages

        // Keep only the most recent messages
        $recentMessages = array_slice($messages, -$keepRecent);

        // Clear and repopulate with recent messages
        $history->setMessages([]);

        foreach ($recentMessages as $message) {
            $history->addMessage($message);
        }

        // Save to storage
        $history->writeToMemory();
    }

    protected function getConversationSummary(): ?string
    {
        // Access chat history directly from the agent
        $chatId = $this->chatHistory()->getIdentifier();

        $summary = ChatSummary::where('chat_history_id', $chatId)
            ->latest()
            ->first();

        return $summary?->summary_text;
    }

    public function prompt($message)
    {
        return $message;
    }
}
```

Now here's what happens:

- User sends a message to your agent

- Agent responds

- The afterSend hook runs automatically and checks the message count

- If the threshold is met, it dispatches a background job for summarization

- It immediately cleans up old messages (keeps the last 10)

- The job processes the summarization in the background without blocking the user

- The summary gets stored in the database

- The summary is picked up automatically by onInitialize the next time the agent is created

Beautiful, right? The user never waits for summarization to happen. Message cleanup is instant, and the heavy AI summarization work happens in the background. Everything is contained within your agent class.
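One thing to watch in cleanupOldMessages(): a blind array_slice() can cut between an assistant tool call and its tool result, leaving a tool message at the start of the history. OpenAI's Chat Completions API, for example, rejects a tool message that doesn't follow the assistant message that requested it. Here is a plain-PHP sketch of a safer trim, assuming messages are arrays with a 'role' key and that tool results use the role 'tool'; trimHistory() is a hypothetical helper, not part of LarAgent.

```php
<?php

// Keep roughly the last $keep messages, but never start the kept slice
// on a 'tool' message whose matching assistant tool call was cut off.
function trimHistory(array $messages, int $keep = 10): array
{
    $recent = array_slice($messages, -$keep);

    // Drop leading tool results that would be orphaned
    while ($recent !== [] && ($recent[0]['role'] ?? null) === 'tool') {
        array_shift($recent);
    }

    return $recent;
}

$messages = [
    ['role' => 'user', 'content' => 'Where is my order?'],
    ['role' => 'assistant', 'content' => null, 'tool_calls' => [['id' => 'call_1']]],
    ['role' => 'tool', 'content' => 'shipped', 'tool_call_id' => 'call_1'],
    ['role' => 'assistant', 'content' => 'It has shipped.'],
    ['role' => 'user', 'content' => 'Thanks!'],
];

$trimmed = trimHistory($messages, 3);
echo count($trimmed), "\n"; // 2
```

Dropping the orphaned result is the simplest fix; extending the slice backwards to include the assistant tool call would also work, at the cost of keeping one or two extra messages.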

Testing the Implementation

Let's make sure everything works. We'll simulate a long conversation and verify the summarization kicks in.

Manual Testing via Artisan

The quickest way to test is using LarAgent's built-in chat command:

```bash
php artisan agent:chat CustomerSupportAgent --chat=test-summary
```

Now have a conversation with at least 50 messages. I know, that's tedious. Here's a shortcut:

Create a Test Seeder

Make a seeder to populate test data:

```bash
php artisan make:seeder ChatHistoryTestSeeder
```

Fill it in:

```php
<?php

namespace Database\Seeders;

use App\AiAgents\CustomerSupportAgent;
use Illuminate\Database\Seeder;
use LarAgent\Message;

class ChatHistoryTestSeeder extends Seeder
{
    public function run(): void
    {
        $agent = CustomerSupportAgent::for('test-summary');
        $history = $agent->chatHistory();

        // Create 60 test messages
        for ($i = 1; $i <= 60; $i++) {
            if ($i % 2 === 1) {
                // User message
                $history->addMessage(
                    Message::user("This is test user message number $i. I have a question about your product.")
                );
            } else {
                // Assistant message
                $history->addMessage(
                    Message::assistant("Thank you for your question. Here's my response to message $i. How else can I help?")
                );
            }
        }

        // Save to storage
        $history->writeToMemory();

        $this->command->info('Created 60 test messages for chat: test-summary');
    }
}
```

Run it:

```bash
php artisan db:seed --class=ChatHistoryTestSeeder
```

Trigger Summarization

Now send one more message through your agent:

```bash
php artisan agent:chat CustomerSupportAgent --chat=test-summary
```

This should trigger the afterSend hook, which checks the message count (now over 60), sees that it exceeds the 50-message threshold, and dispatches the summarization job.

Check the Results

Make sure your queue worker is running:

```bash
php artisan queue:work
```

Then check your database:

```
php artisan tinker
>>> App\Models\ChatSummary::all();
```

You should see a summary record with your chat_history_id.

Verify Summary Injection

Send another message and check that the agent picks up the summary:

```php
$agent = CustomerSupportAgent::for('test-summary');

$response = $agent->respond('Can you remember what we discussed earlier?');
```

The agent should reference information from the summary in its response, proving it has context from the original 60 messages even though only 10 are in active history.
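Because onInitialize() runs every time the agent is created, it's worth checking whether summary messages from earlier turns are accumulating in the stored history. If they are, one option is to remove previous summary messages before adding the current one. The sketch below shows that filtering on plain arrays; the SUMMARY_HEADER constant and the injectSummary() function are illustrative names, and mapping the idea onto LarAgent's message objects is up to you.

```php
<?php

const SUMMARY_HEADER = '--- Previous Conversation Summary ---';

// Remove summary messages injected on earlier turns, then append the current one,
// so the history holds at most one summary at a time.
function injectSummary(array $history, string $summary): array
{
    $withoutOld = array_values(array_filter(
        $history,
        fn (array $m) => !(($m['role'] ?? null) === 'developer'
            && str_starts_with((string) ($m['content'] ?? ''), SUMMARY_HEADER))
    ));

    $withoutOld[] = [
        'role' => 'developer',
        'content' => SUMMARY_HEADER . "\n\n" . $summary,
    ];

    return $withoutOld;
}

$history = [
    ['role' => 'developer', 'content' => SUMMARY_HEADER . "\n\nOld summary"],
    ['role' => 'user', 'content' => 'Hi again'],
    ['role' => 'assistant', 'content' => 'Welcome back!'],
];

$history = injectSummary($history, 'New summary');
$history = injectSummary($history, 'Newer summary');
echo count($history), "\n"; // 3
```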

Debug Tips

If something's not working:

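- Check storage/logs/laravel.log for errors

- Verify the queue worker is running (php artisan queue:work)

- Check whether the summary was created: ChatSummary::where('chat_history_id', 'test-summary')->first(). If nothing matches, query with the value of $agent->chatHistory()->getIdentifier() instead, since LarAgent may not store the raw 'test-summary' key as the identifier

- Inspect what the agent receives: temporarily add dd($this->chatHistory()->toArray()) at the end of onInitialize() to confirm the summary message was added

- Ask for help in the LarAgent community Discord server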

Conclusion

You've just built an automatic chat history summarization system that saves you money, keeps your responses fast, and preserves the important context of long conversations with your AI agent.

Think about what you've accomplished: automatic monitoring using agent hooks, intelligent summarization with a dedicated AI agent, context injection through a developer message, and the heavy lifting running in the background without blocking your users. Your agent now has the memory of an elephant with the efficiency of a minimalist.

The 50-message threshold we used is just a starting point. Tune it based on your needs—maybe 25 messages for cost-sensitive apps, or 100 for complex support conversations.
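If message count is a poor proxy for your traffic (a handful of very long messages can cost more than dozens of short ones), a token-based trigger is an alternative. The sketch below uses the common rule of thumb of roughly four characters per token for English text; it is an approximation, not a real tokenizer, and both function names and the 3000-token budget are illustrative choices.

```php
<?php

// Rough token estimate using the ~4 characters per token rule of thumb for English text.
// This is an approximation; use a real tokenizer if you need accurate counts.
function estimateTokens(array $messages): int
{
    $chars = 0;

    foreach ($messages as $message) {
        $content = $message['content'] ?? '';
        if (is_string($content)) {
            $chars += strlen($content); // byte length; matches character count for ASCII text
        }
    }

    return (int) ceil($chars / 4);
}

// Hypothetical token-based trigger; 3000 is an arbitrary example budget
function shouldSummarizeByTokens(array $messages, int $tokenBudget = 3000): bool
{
    return estimateTokens($messages) >= $tokenBudget;
}

$short = array_fill(0, 60, ['role' => 'user', 'content' => 'ok']);
$long = array_fill(0, 5, ['role' => 'user', 'content' => str_repeat('a', 4000)]);

var_dump(shouldSummarizeByTokens($short)); // bool(false): 60 messages, ~30 tokens
var_dump(shouldSummarizeByTokens($long));  // bool(true): 5 messages, ~5000 tokens
```

The example shows why the switch can matter: 60 tiny messages stay far under the budget, while 5 long ones go over it.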

Also, prompts used here may need adjustment based on your context.

Here's my question for you: How long are your typical conversations? Would you stick with message count, or would monitoring token usage work better for your use case?

Now go forth and let your agents have infinite memory, without infinite costs.

Happy coding! 🚀

Resources

- LarAgent on Github (Please, give us a ⭐)

- Laravel docs

- LarAgent docs