How to Build a Self-Refining Content Agent with LarAgent

We've all been there: you ask an AI to write a LinkedIn post, and it gives you something... okay. Not great, just okay. It's a bit generic, maybe too enthusiastic, or it misses the nuance of your brand voice.

So you rewrite the prompt. "Make it punchier." "Don't use emojis." "Sound more professional."

You're doing the work of a supervisor: reviewing, giving feedback, and asking for revisions. But what if you could automate that supervision?

What if you could build a system where one AI writes the content, and another AI reviews it against your specific rules, giving feedback until it's perfect?

That's exactly what we're going to build today.

In this tutorial, I'll show you how to create a self-refining content agent using LarAgent. We'll build a complete orchestration system where a "Writer Agent" and a "Reviewer Agent" work together in a loop to automatically produce high-quality content that meets your exact standards.

We'll cover:

- The "Supervising Pattern" for AI workflows

- Building specialized agents for writing and reviewing

- Using LarAgent's structured output for precise feedback

- Orchestrating the feedback loop in pure Laravel code

- Optimizing costs by using cheaper models for review

By the end, you'll have a system that doesn't just generate content—it improves it.

Let's get started.

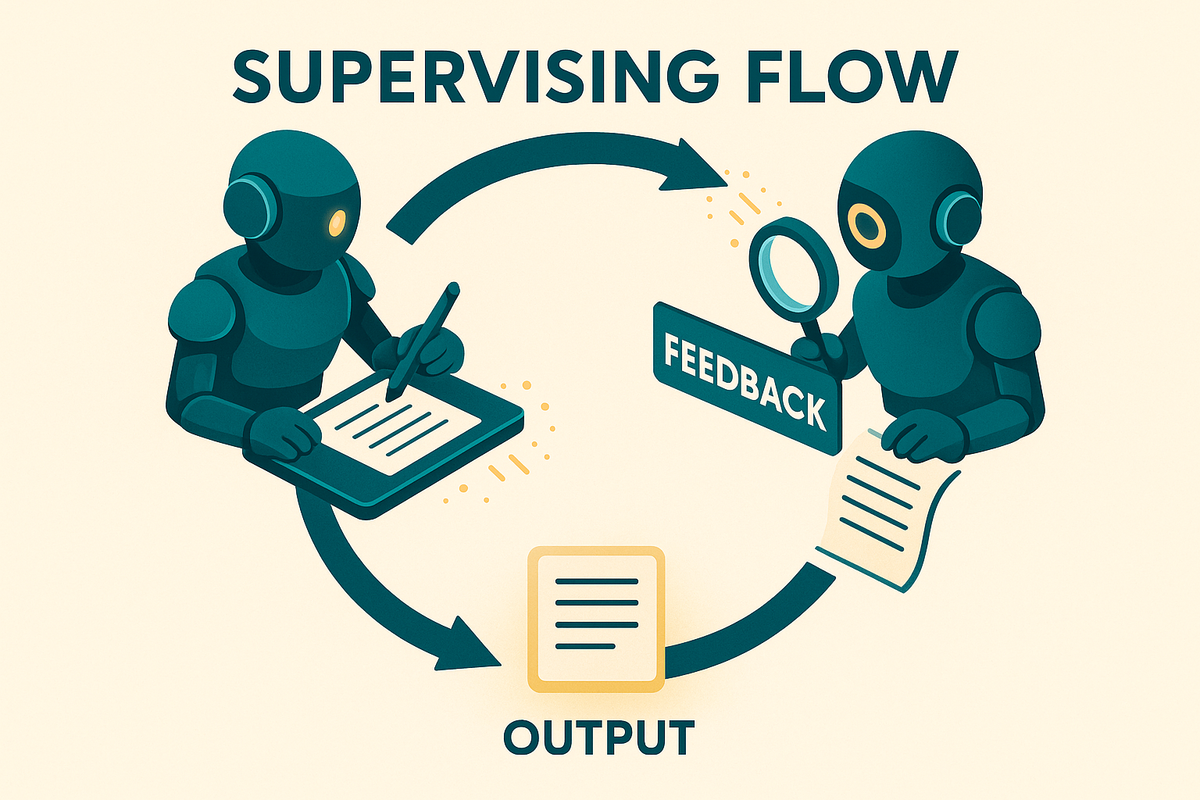

Understanding the Supervising Pattern

The concept is simple but powerful. Instead of relying on a single "one-shot" generation, we split the responsibility into two distinct roles:

- The Writer: Responsible for creativity, drafting, and implementing changes.

- The Reviewer: Responsible for quality control, checking against rules, and providing specific feedback.

This mimics a real-world editorial process. A writer drafts, an editor reviews, and the cycle repeats until the content is ready.

Why this works better

Single-agent prompts often struggle to balance creativity with strict adherence to rules. When you ask a model to "be creative but follow these 15 constraints," it often hallucinates or ignores half the rules.

By separating the concerns, you let the Writer focus on creation and the Reviewer focus on constraints. The feedback loop ensures that errors are caught and fixed automatically.

Plus, it's cost-effective. You can use a high-quality model for writing and a cheaper, faster model for reviewing, since critiquing is usually an easier task for an LLM than creating.

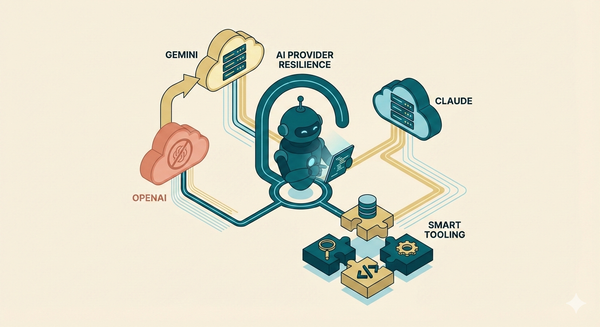

System Architecture Overview

Here's the workflow we're building:

1. Input: You provide a post idea and a set of style rules.

2. Draft: The Writer Agent creates an initial draft.

3. Review: The Reviewer Agent evaluates the draft against your rules and assigns a score (0-100).

4. Decision:

   - If the score is at or above your threshold (e.g., 85), we're done.

   - If not, the feedback is sent back to the Writer.

5. Refine: The Writer generates a new version based on the feedback.

6. Loop: Steps 3-5 repeat until the threshold is met or we hit a maximum iteration limit.

This entire process happens within a Laravel service class, orchestrating the two stateless agents.
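To make the loop concrete before bringing in LarAgent, here is a minimal, framework-free sketch of the workflow. The `refineLoop` function and the two stub callables are hypothetical stand-ins for the Writer and Reviewer, not LarAgent APIs; the stubs simply pretend that each revision raises the score.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical sketch of the write -> review -> refine loop.
 * $writer:   fn(string $idea, string $draft, array $feedback): string
 * $reviewer: fn(string $draft): array with 'score' (int) and 'feedback' (string[])
 */
function refineLoop(
    callable $writer,
    callable $reviewer,
    string $idea,
    int $minScore = 85,
    int $maxIterations = 5
): array {
    $draft = '';
    $feedback = [];
    $score = 0;

    for ($i = 1; $i <= $maxIterations; $i++) {
        $draft = $writer($idea, $draft, $feedback); // draft on the first pass, refine afterwards
        $review = $reviewer($draft);                // review against the rules
        $score = $review['score'];

        if ($score >= $minScore) {                  // decision: threshold met, stop
            return ['status' => 'success', 'post' => $draft, 'score' => $score, 'iterations' => $i];
        }

        $feedback = $review['feedback'];            // feed the critique into the next pass
    }

    return ['status' => 'max_iterations_reached', 'post' => $draft, 'score' => $score, 'iterations' => $maxIterations];
}

// Stub agents (assumptions for illustration): the "writer" appends a marker
// per revision, and the "reviewer" scores by counting those markers.
$writer = fn (string $idea, string $draft, array $feedback): string =>
    $draft === '' ? $idea : $draft . ' (revised)';

$reviewer = fn (string $draft): array => [
    'score' => 60 + 15 * substr_count($draft, '(revised)'),
    'feedback' => ['Tighten the hook.'],
];

$result = refineLoop($writer, $reviewer, 'Build AI agents with Laravel');
```

Passing the agents in as callables keeps the control flow testable without any API calls, which is handy when you want to check the exit conditions in isolation.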

Critical Insight: The quality of your output depends almost entirely on the quality of your rules. Garbage rules in, garbage content out. We'll cover how to craft killer rules later in this guide.

Building the Writer Agent

First, let's create our Writer. This agent's job is to take an idea (and optional feedback) and turn it into a polished post.

Generate the agent:

php artisan make:agent ContentWriterAgent

Now, let's configure it in app/AiAgents/ContentWriterAgent.php:

<?php

namespace App\AiAgents;

use LarAgent\Agent;

class ContentWriterAgent extends Agent
{
    // Use a capable model for writing (e.g., GPT-4o)
    protected $model = 'gpt-4o';

    // We don't need chat history persistence for this workflow
    protected $history = 'in_memory';

    // Balanced temperature for creativity
    protected $temperature = 0.7;

    public function instructions()
    {
        return <<<'PROMPT'
        You are an expert content writer specializing in high-engagement social media posts.

        Your goal is to write content that is:
        - Engaging and hook-driven
        - Clear and concise
        - Aligned with the specific style rules provided

        When receiving feedback, apply it precisely to improve the content while maintaining the core message.
        PROMPT;
    }
}

Simple, right? The Writer doesn't need complex logic because its "intelligence" comes from the feedback loop we're about to build.

Building the Reviewer Agent

The Reviewer is where the magic happens. It needs to be strict, objective, and structured.

Generate the agent:

php artisan make:agent ContentReviewerAgent

Configure app/AiAgents/ContentReviewerAgent.php. Note the use of Structured Output to ensure we get machine-readable feedback.

<?php

namespace App\AiAgents;

use LarAgent\Agent;

class ContentReviewerAgent extends Agent
{
    // Reviewing is easier than writing, so we can use a cheaper model
    protected $model = 'gpt-4.1-mini';

    protected $history = 'in_memory';

    // Low temperature for consistent, objective evaluation
    protected $temperature = 0.2;

    public function instructions()
    {
        return <<<'PROMPT'
        You are a strict content editor and quality assurance specialist.
        Your job is to evaluate content against a specific set of rules.
        You must be objective, critical, and precise.
        Do not rewrite the content. Only provide specific, actionable feedback on what needs to change.
        PROMPT;
    }

    // Define the structured output schema.
    // Declared public so it stays compatible with the visibility of the parent method.
    public function structuredOutput()
    {
        return [
            'name' => 'content_evaluation',
            'schema' => [
                'type' => 'object',
                'properties' => [
                    'score' => [
                        'type' => 'integer',
                        'description' => 'A score from 0 to 100 indicating how well the content follows the rules.',
                    ],
                    'feedback' => [
                        'type' => 'array',
                        'items' => [
                            'type' => 'string',
                        ],
                        'description' => 'A list of specific, actionable improvements needed. Be concise.',
                    ],
                    'reasoning' => [
                        'type' => 'string',
                        'description' => 'Brief explanation of the score.',
                    ],
                ],
                'required' => ['score', 'feedback', 'reasoning'],
                'additionalProperties' => false,
            ],
            'strict' => true,
        ];
    }
}

By defining structuredOutput, LarAgent will automatically parse the AI's response into a nice PHP array containing the score and feedback list. This makes our orchestration logic incredibly simple.
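Even with a strict schema, it can be worth validating the array before the loop relies on it. The sketch below is a hypothetical helper (`normalizeEvaluation` is not part of LarAgent) that assumes the reviewer returns the three keys defined in the schema; it fails fast on a malformed response and clamps the score to the 0-100 range.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: checks the reviewer's array and returns a cleaned copy.
 * Throws InvalidArgumentException if the shape does not match the schema.
 */
function normalizeEvaluation(array $raw): array
{
    foreach (['score', 'feedback', 'reasoning'] as $key) {
        if (!array_key_exists($key, $raw)) {
            throw new InvalidArgumentException("Missing key in evaluation: {$key}");
        }
    }

    if (!is_numeric($raw['score']) || !is_array($raw['feedback']) || !is_string($raw['reasoning'])) {
        throw new InvalidArgumentException('Evaluation has an unexpected shape.');
    }

    // Keep only non-empty string feedback items and reindex the list.
    $feedback = array_values(array_filter(
        $raw['feedback'],
        fn ($item): bool => is_string($item) && trim($item) !== ''
    ));

    return [
        'score' => max(0, min(100, (int) $raw['score'])), // clamp to 0-100
        'feedback' => $feedback,
        'reasoning' => $raw['reasoning'],
    ];
}
```

You could call this on the reviewer's response before reading the score, so that a malformed reply surfaces as an exception instead of a silent bad comparison.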

Creating the Orchestration Service

Now we need a conductor for our orchestra. Let's create a service class that manages the loop.

Create app/Services/ContentRefinementService.php:

<?php

namespace App\Services;

use App\AiAgents\ContentReviewerAgent;
use App\AiAgents\ContentWriterAgent;
use Illuminate\Support\Facades\Log;

class ContentRefinementService
{
    protected int $maxIterations = 5;

    protected int $minScore = 85;

    public function refine(string $idea, string $rules): array
    {
        $currentPost = "";
        $feedback = [];
        $iteration = 1;
        $history = [];

        while ($iteration <= $this->maxIterations) {
            // Step 1: Generate (or refine) the post
            $currentPost = $this->generatePost($idea, $rules, $currentPost, $feedback);

            // Step 2: Review the post
            $evaluation = $this->evaluatePost($currentPost, $rules);

            // Log progress
            $history[] = [
                'iteration' => $iteration,
                'post' => $currentPost,
                'score' => $evaluation['score'],
                'feedback' => $evaluation['feedback'],
            ];

            Log::info("Iteration {$iteration}: Score {$evaluation['score']}");

            // Step 3: Check exit conditions
            if ($evaluation['score'] >= $this->minScore) {
                return [
                    'status' => 'success',
                    'final_post' => $currentPost,
                    'score' => $evaluation['score'],
                    'iterations' => $iteration,
                    'history' => $history,
                ];
            }

            // Prepare for next loop
            $feedback = $evaluation['feedback'];
            $iteration++;
        }

        // If we hit max iterations, return the last draft.
        // Note: this is the most recent attempt, not necessarily the highest-scoring one.
        return [
            'status' => 'max_iterations_reached',
            'final_post' => $currentPost,
            'score' => $evaluation['score'], // Score of the last iteration
            'iterations' => $iteration - 1,
            'history' => $history,
        ];
    }

    protected function generatePost(string $idea, string $rules, string $currentPost, array $feedback): string
    {
        $agent = ContentWriterAgent::make();

        if (empty($currentPost)) {
            // First iteration: Fresh draft
            $prompt = "Write a LinkedIn post based on this idea:\n\n{$idea}\n\nFollow these rules strictly:\n{$rules}";
        } else {
            // Subsequent iterations: Refinement
            $feedbackList = implode("\n- ", $feedback);

            $prompt = "Here is the previous draft:\n\n{$currentPost}\n\n" .
                "Refine this post based on the following feedback:\n- {$feedbackList}\n\n" .
                "Ensure you still follow the original rules:\n{$rules}\n\n" .
                "Return ONLY the updated post text.";
        }

        return $agent->respond($prompt);
    }

    protected function evaluatePost(string $post, string $rules): array
    {
        $agent = ContentReviewerAgent::make();

        $prompt = "Evaluate the following post against these rules:\n\n" .
            "RULES:\n{$rules}\n\n" .
            "POST:\n{$post}";

        // Returns array because of structured output
        return $agent->respond($prompt);
    }
}

This service handles the entire lifecycle:

- It manages the state (current post, feedback, iteration count).

- It calls the Writer to generate or refine content.

- It calls the Reviewer to get a score and feedback.

- It decides whether to loop again or finish.
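One design detail worth noting: when the loop runs out of iterations, the service returns the last draft, and scores do not always climb monotonically. As a possible refinement, the sketch below picks the highest-scoring entry from the `history` array instead. The `bestAttempt` helper is hypothetical and assumes each entry has the `score` key that `refine()` records.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: returns the history entry with the highest score,
 * or null when the history is empty.
 */
function bestAttempt(array $history): ?array
{
    $best = null;

    foreach ($history as $entry) {
        // Strict ">" keeps the earliest entry when two scores tie.
        if ($best === null || $entry['score'] > $best['score']) {
            $best = $entry;
        }
    }

    return $best;
}
```

In the `max_iterations_reached` branch you could then return the post and score from `bestAttempt($history)` rather than from the final iteration.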

Running the Workflow

You can trigger this service from anywhere—a controller, a job, or an Artisan command. Let's make a quick command to test it.

php artisan make:command RefineContent

In app/Console/Commands/RefineContent.php:

<?php

namespace App\Console\Commands;

use App\Services\ContentRefinementService;
use Illuminate\Console\Command;

class RefineContent extends Command
{
    protected $signature = 'content:refine';

    protected $description = 'Generate and refine content using AI agents';

    public function handle(ContentRefinementService $service)
    {
        $idea = "It's time to build AI Agents with Laravel.";

        $rules = <<<'RULES'
        1. Hook: Start with a controversial or surprising statement (1-2 lines).
        2. Structure: Short paragraphs, use bullet points for benefits.
        3. Tone: Professional but enthusiastic, avoid corporate jargon.
        4. Length: Under 200 words.
        5. CTA: Soft call to action at the end.
        RULES;

        $this->info("Starting refinement process...");

        $result = $service->refine($idea, $rules);

        $iterations = $result['iterations'];
        $score = $result['score'];
        $final_post = $result['final_post'];

        $this->info("Process finished in {$iterations} iterations.");
        $this->info("Final Score: {$score}/100");
        $this->newLine();
        $this->info("--- FINAL POST ---");
        $this->line($final_post);
    }
}

Run it:

php artisan content:refine

Testing and Debugging

When building this, you might run into a few common issues:

- Infinite Loops: If the Writer can't satisfy the Reviewer, they might loop forever. That's why $maxIterations is crucial.

- Hallucinations: Sometimes the Writer ignores feedback. If this happens, try lowering the Writer's temperature (e.g., to 0.5).

- Vague Feedback: If the Reviewer says "make it better," the Writer won't know what to do. Improve your Reviewer's instructions to demand specific feedback. Sometimes, you may need to specify or give examples of feedback.
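Beyond a hard iteration cap, one optional safeguard is to stop early when the scores stop improving, since further passes then mostly burn tokens. The sketch below is an assumption on my part rather than something the workflow above does: `hasPlateaued` is a hypothetical helper that compares the best score in the last few iterations against the best score before them.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: true when the last $window scores failed to beat
 * the best earlier score by at least $minGain points.
 */
function hasPlateaued(array $scores, int $window = 2, int $minGain = 1): bool
{
    if (count($scores) <= $window) {
        return false; // not enough history to judge
    }

    $recent = array_slice($scores, -$window);
    $before = array_slice($scores, 0, -$window);

    return max($recent) - max($before) < $minGain;
}
```

Inside the loop, the scores could come from `array_column($history, 'score')`, with a `break` once `hasPlateaued()` returns true.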

Tip: Log the feedback from each iteration. It's fascinating (and helpful) to see exactly what the Reviewer is complaining about.

Crafting Effective Rules

This is the most important section of this article. Your system is only as good as your rules.

If you give vague rules like "Write a good post," you'll get vague results. You need to be specific.

The "Reverse Engineering" Method

Here's my proven method for creating killer rules:

- Find a post you love. Find a piece of content that perfectly matches the style you want.

- Ask AI to analyze it. Paste it into ChatGPT and say: "Analyze this post's style, tone, structure, rhythm, and formatting. Describe it as a set of rules for a writer. Do NOT mention the specific topic or facts, just the form."

- Refine the output. Take that analysis and tweak it. Remove anything too specific to that one post.

- Use these as your rules.

This gives you a blueprint that captures the essence of high-quality content without copying the content itself.

Real-World Considerations

Cost Optimization

You don't need GPT-5 for everything.

- Writer: Needs intelligence. Use GPT-4o, GPT-5, or an LLM of a similar level.

- Reviewer: Needs to follow instructions and check boxes. GPT-5-mini or even GPT-4.1-mini is often sufficient and much cheaper.

Since the Reviewer runs every iteration, using a cheaper model there saves significant money at scale.
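To get a feel for the difference, a rough back-of-the-envelope estimate helps. The sketch below uses a hypothetical `estimateRunCost` helper, and the token counts and per-million-token prices are placeholder numbers chosen for illustration, not real provider pricing; plug in your own figures.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical estimator: cost of one refinement run, in the same currency
 * as the prices. Prices are per million tokens; token counts are per call
 * (input and output combined, as a simplification).
 */
function estimateRunCost(
    int $iterations,
    int $writerTokensPerCall,
    int $reviewerTokensPerCall,
    float $writerPricePerMillion,
    float $reviewerPricePerMillion
): float {
    $writerCost = $iterations * $writerTokensPerCall / 1_000_000 * $writerPricePerMillion;
    $reviewerCost = $iterations * $reviewerTokensPerCall / 1_000_000 * $reviewerPricePerMillion;

    return $writerCost + $reviewerCost;
}

// Placeholder numbers for illustration only.
$mixed = estimateRunCost(3, 1500, 1200, 10.0, 0.5);      // cheaper model for the Reviewer
$sameModel = estimateRunCost(3, 1500, 1200, 10.0, 10.0); // Reviewer on the Writer's model
```

Comparing `$mixed` with `$sameModel` shows how much of the per-run cost comes from the review step under your own assumptions.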

Asynchronous Processing

For a production app, don't run this in a controller request—it takes time! Wrap the refine() call in a Queued Job.

- User submits request.

- Job runs in background (taking 30-60 seconds).

- Job saves result to database.

- User gets a notification.

Conclusion

You've just built a sophisticated AI agent workflow that mimics a human editorial process.

By separating the Writer (creation) from the Reviewer (evaluation), you've created a system that self-corrects and improves over time. It's more reliable, higher quality, and surprisingly easy to implement with LarAgent.

This pattern isn't just for LinkedIn posts. You can use it for:

- Code generation (Writer writes code, Reviewer checks for bugs).

- Email drafting (Writer drafts, Reviewer checks for tone).

- Data extraction (Writer extracts, Reviewer verifies schema).

The possibilities are endless when your agents start working together.

Still have questions? Join the LarAgent community 💪

Happy coding! 🚀

Resources

- LarAgent on GitHub (Please, give us a ⭐)

- Laravel docs

- LarAgent docs

- LarAgent community

FAQ

How does a self-refining content agent differ from a single AI model?

A single model generates content in one shot, often missing specific constraints or quality nuances. A self-refining agent uses a feedback loop to iteratively improve the content, catching errors and refining style just like a human editor would.

What's the difference between the Writer Agent and Reviewer Agent?

The Writer Agent focuses on creativity and drafting content. The Reviewer Agent focuses on quality control, evaluating the content against strict rules and providing structured feedback. Separating these roles leads to better results than asking one Agent to do both.

How do I set up the structured output schema in LarAgent?

You can define the structuredOutput() method in your agent class, returning an array that defines the JSON schema (name, schema, strict mode). LarAgent handles the parsing automatically, returning a PHP array.

Can I use cheaper LLM models for content generation and review?

Yes! A common strategy is to use a capable model (like GPT-5) for the Writer to ensure quality generation, and a cheaper, faster model (like GPT-5-mini) for the Reviewer, as evaluation is a simpler task than creation.

Can this workflow run asynchronously in the background?

Absolutely. Since the refinement loop can take time (multiple API calls), it is highly recommended to run the orchestration service within a Laravel Queued Job to prevent request timeouts and improve user experience.