LarAgent v0.6: New Drivers, Smarter Defaults, and Fixes 🎉

Building AI agents in Laravel keeps getting smoother. With LarAgent v0.6, we’re bringing more model providers, better defaults, and bug fixes that make your development life easier.

Whether you’re plugging into OpenRouter, Claude, or Ollama—or just want a cleaner setup out of the box—this release has something for you. Let’s dive in 🚀

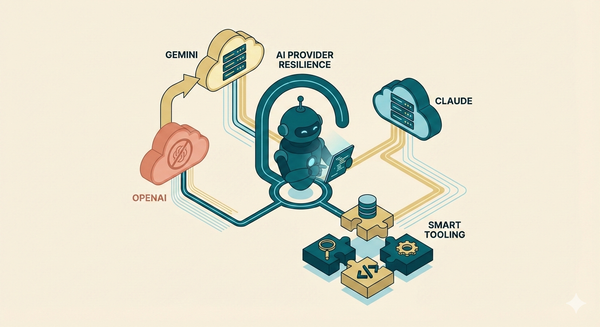

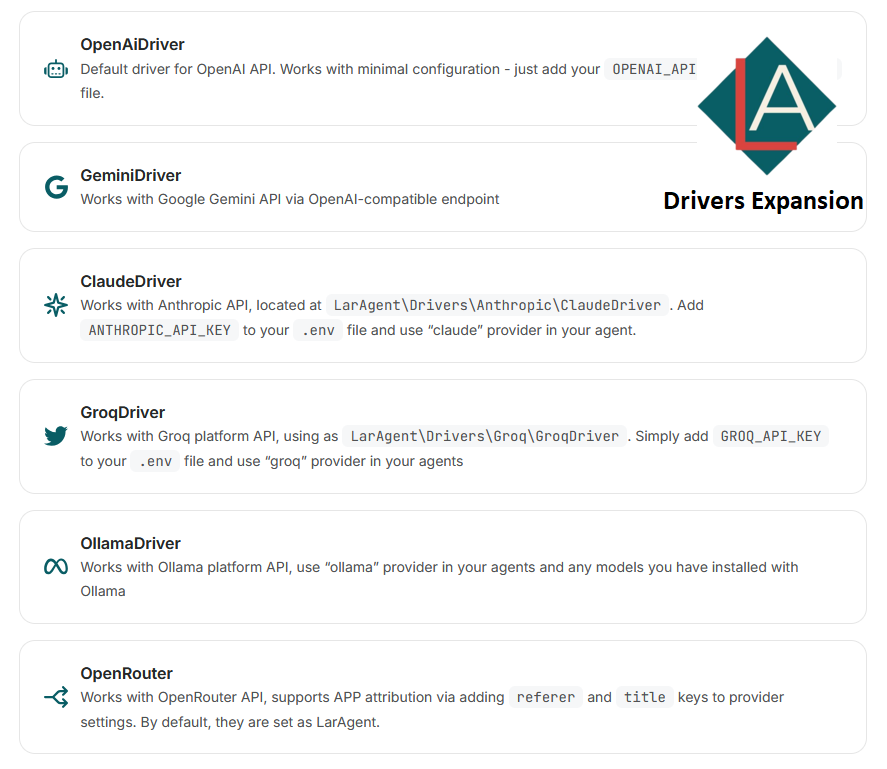

More Driver Options 🌍

You asked for it—we delivered. LarAgent now speaks with more providers, so you can run your agents wherever you like:

- OpenRouter driver – connect to OpenRouter’s unified model marketplace.

- Anthropic driver – full support for Claude models.

- Ollama driver – run models locally with Ollama.

- Groq driver – improvements to the existing integration.

This makes it easier than ever to experiment with different providers or mix and match them per project.
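Switching providers mostly comes down to pointing an agent at one of the provider keys in `config/laragent.php`. The sketch below assumes the usual LarAgent agent shape (a class extending `LarAgent\Agent` with `$provider`, `$model`, `$history` and an `instructions()` method). The class name `SupportAgent` and its instructions are hypothetical, used only for illustration.

```php
<?php

namespace App\AiAgents;

use LarAgent\Agent;

// Hypothetical agent, shown only to illustrate provider selection.
class SupportAgent extends Agent
{
    // Provider key from config/laragent.php:
    // 'default', 'gemini', 'groq', 'claude', 'openrouter' or 'ollama'.
    protected $provider = 'claude';

    // Model name taken from the 'claude' entry in the default config.
    protected $model = 'claude-3-7-sonnet-latest';

    // Keep the chat history in memory for this example.
    protected $history = 'in_memory';

    public function instructions()
    {
        return 'You are a concise support assistant for a Laravel application.';
    }
}
```

Calling it would then look like `SupportAgent::for('user-42')->respond('How do I reset my password?');`. To try a local model instead, the idea is to change `$provider` to `'ollama'` and `$model` to a model you have pulled into your Ollama server; the rest of the class stays the same.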

Smarter Defaults and Fixes ⚙️

We polished the internals so you don’t have to. Out of the box, your config now includes:

- All supported drivers are pre-configured as providers in `config/laragent.php`.

- `fallback_provider` set to `null` by default (safer, clearer behavior).

- Streaming fixed – no more `ob_flush` problems when using SSE.

- Dependencies updated – keeps everything compatible and stable.

These small details make a big difference when you’re bootstrapping a new agent or debugging across environments.
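The new fallback default is a single line in `config/laragent.php`. Leaving it `null` means no fallback provider is configured unless you opt in. A minimal sketch, assuming `fallback_provider` accepts the name of one of the keys under `providers` when you do want a fallback (the `'gemini'` choice below is only an example):

```php
// config/laragent.php (excerpt)

// v0.6 default: no fallback provider is configured.
'fallback_provider' => null,

// Illustrative opt-in: name one of the keys under 'providers'.
// 'fallback_provider' => 'gemini',
```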

Why This Matters

LarAgent’s mission is to make Laravel the easiest framework for AI agents.

With v0.6, you can:

- Experiment with cutting-edge models like Claude or local Ollama LLMs.

- Rely on OpenRouter's stability in production.

- Build APIs that stream reliably and work across clients like OpenWebUI, LibreChat, LangChain, CrewAI, and more.
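Streaming over SSE is where this release's `ob_flush` fix applies. A minimal route sketch, assuming the `streamResponse($message, 'sse')` method described in LarAgent's streaming documentation. `App\AiAgents\SupportAgent` is a hypothetical agent class, and the `/chat/stream` path and `session_id`/`message` request fields are illustrative names.

```php
<?php

// routes/api.php (excerpt)

use App\AiAgents\SupportAgent; // hypothetical agent class
use Illuminate\Http\Request;
use Illuminate\Support\Facades\Route;

Route::post('/chat/stream', function (Request $request) {
    // One chat history per session id supplied by the client.
    $sessionId = $request->input('session_id', 'guest');

    // Assumed API: returns a streamed Server-Sent Events response
    // that Laravel sends to the client chunk by chunk.
    return SupportAgent::for($sessionId)->streamResponse(
        $request->input('message'),
        'sse'
    );
});
```

Keeping the agent call inside the route closure means Laravel handles the streamed response object directly, so no manual output buffering is needed in application code.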

New configuration file 🎯

Out of the box, `config/laragent.php` now ships with six pre-configured providers:

```php
/**
 * Always keep provider named 'default'
 * You can add more providers in array
 * by copying the 'default' provider
 * and changing the name and values
 *
 * You can remove any other providers
 * which your project doesn't need
 */
'providers' => [
    'default' => [
        'label' => 'openai',
        'api_key' => env('OPENAI_API_KEY'),
        'driver' => \LarAgent\Drivers\OpenAi\OpenAiDriver::class,
        'default_context_window' => 50000,
        'default_max_completion_tokens' => 10000,
        'default_temperature' => 1,
    ],

    'gemini' => [
        'label' => 'gemini',
        'api_key' => env('GEMINI_API_KEY'),
        'driver' => \LarAgent\Drivers\OpenAi\GeminiDriver::class,
        'default_context_window' => 1000000,
        'default_max_completion_tokens' => 10000,
        'default_temperature' => 1,
    ],

    'groq' => [
        'label' => 'groq',
        'api_key' => env('GROQ_API_KEY'),
        'driver' => \LarAgent\Drivers\Groq\GroqDriver::class,
        'default_context_window' => 131072,
        'default_max_completion_tokens' => 131072,
        'default_temperature' => 1,
    ],

    'claude' => [
        'label' => 'claude',
        'api_key' => env('ANTHROPIC_API_KEY'),
        'model' => 'claude-3-7-sonnet-latest',
        'driver' => \LarAgent\Drivers\Anthropic\ClaudeDriver::class,
        'default_context_window' => 200000,
        'default_max_completion_tokens' => 8192,
        'default_temperature' => 1,
    ],

    'openrouter' => [
        'label' => 'openrouter',
        'api_key' => env('OPENROUTER_API_KEY'),
        'model' => 'openai/gpt-oss-20b:free',
        'driver' => \LarAgent\Drivers\OpenAi\OpenRouter::class,
        'default_context_window' => 200000,
        'default_max_completion_tokens' => 8192,
        'default_temperature' => 1,
    ],

    /**
     * Assumes you have ollama server running with default settings
     * Where URL is http://localhost:11434/v1 and no api_key
     * If you have ollama server running with custom settings
     * You can set api_key and api_url in the provider below
     */
    'ollama' => [
        'label' => 'ollama',
        'driver' => \LarAgent\Drivers\OpenAi\OllamaDriver::class,
        'default_context_window' => 131072,
        'default_max_completion_tokens' => 131072,
        'default_temperature' => 0.8,
    ],
],
```

Join the Community 🌟

LarAgent is open-source and growing fast. Here’s how you can get involved:

- ⭐ Star us on GitHub: github.com/maestroerror/laragent

- 🛠️ Contribute: PRs are welcome, whether it’s drivers, docs, or fixes.

- 💬 Join Discord: discord.gg/NAczq2T9F8

Let's make Laravel the most productive framework for AI development 💪

Final Thoughts 💡

With more providers, smarter defaults, and smoother streaming, you can focus on what matters: building amazing AI features into your Laravel apps.

Happy coding! 🚀