Laravel AI Tutorial: Create Local Agents with Ollama

Learn how to build AI agents cost-effectively in Laravel using LarAgent and local LLMs via Ollama. No OpenAI API keys needed for development!

Building AI-powered agents is an exciting frontier in modern web development, but there's a catch: cloud-based LLMs like OpenAI's GPT models can get expensive fast—especially during development when you're constantly refining prompts, debugging tools, and running test conversations.

This tutorial introduces a cost-effective, flexible, and powerful solution: building an AI assistant using the LarAgent package in a Laravel application, powered by local LLMs via Ollama.

The best part? It all runs locally—no OpenAI API key, no token limits, just pure development freedom.

If you prefer to watch, there is also a video version of this tutorial (see the YouTube link in the Resources section).

Check the Resources section below for the GitHub repo 👇

What You'll Build

By the end of this tutorial, you'll have a working AI appointment booking assistant for a dental clinic that can:

- Recommend services

- Check availability

- Book appointments

- Transfer conversations to a human manager

And all of this is powered by a local LLM (llama3.2:3b) running through Ollama.

This guide assumes you already have some experience with Laravel.

Why Local LLMs via Ollama?

Using Ollama allows you to run large language models (LLMs) directly on your machine. After some testing, I chose the llama3.2:3b model, a compact and efficient 3B-parameter model that:

- Runs on most developer machines (~2GB RAM)

- Follows instructions well

- Supports function calling/tool use, which is essential for real-world AI agents

Don’t have Ollama installed? Check out the Ollama installation guide for Windows & Docker.

If you already have it, run this command to download and start the model:

```bash
ollama run llama3.2:3b
```
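Besides the interactive CLI, Ollama serves an OpenAI-compatible HTTP API under http://localhost:11434/v1 (the same base URL used in the LarAgent provider config later on), which is why an OpenAI-style driver can talk to it. The sketch below only builds, and does not send, a chat-completions request so the shape is visible. The helper name `buildOllamaChatRequest` is hypothetical, and the bearer token is a placeholder: the OpenAI wire format expects one, but Ollama does not check it.

```php
<?php

// Hypothetical helper: assembles an OpenAI-style chat-completions request
// aimed at a local Ollama server. Nothing is sent over the network.
function buildOllamaChatRequest(string $baseUrl, string $model, array $messages): array
{
    return [
        // OpenAI-compatible route: {base}/chat/completions
        'url' => rtrim($baseUrl, '/') . '/chat/completions',
        'headers' => [
            'Content-Type: application/json',
            // Placeholder key; Ollama ignores it.
            'Authorization: Bearer ollama',
        ],
        'body' => json_encode([
            'model' => $model,
            'messages' => $messages,
            'stream' => false,
        ]),
    ];
}

$request = buildOllamaChatRequest('http://localhost:11434/v1', 'llama3.2:3b', [
    ['role' => 'user', 'content' => 'Hello!'],
]);

echo $request['url'], PHP_EOL;  // http://localhost:11434/v1/chat/completions
echo $request['body'], PHP_EOL;
```

With Ollama running, POSTing that body to that URL (with PHP's curl extension or any HTTP client) should return an OpenAI-style JSON response.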

Step-by-Step Breakdown

Laravel Project Setup

Start by creating a new Laravel project:

```bash
composer create-project laravel/laravel dentist-appointments-agent
```

Set up your models for Service and Slot (the full code is in the GitHub repo linked in Resources):

- Service Model: Holds details like name, description, and price.

- Slot Model: Stores appointment times and links to services.

- SlotStatus Enum: Use a PHP enum for the slot status (available, booked).
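A minimal sketch of that enum, assuming a string-backed PHP 8.1 enum whose values are exactly what the slots.status column stores. The namespace is left out so the snippet runs on its own; in the app it would live under App\Enums, matching the `use App\Enums\SlotStatus;` import in the agent.

```php
<?php

// Sketch of SlotStatus as a string-backed enum (PHP 8.1+).
// Assumption: the database stores the lowercase values below.
enum SlotStatus: string
{
    case Available = 'available';
    case Booked = 'booked';
}

// from() maps a stored string back to its case (throws ValueError on unknown values).
var_dump(SlotStatus::from('booked') === SlotStatus::Booked); // bool(true)
echo SlotStatus::Available->value, PHP_EOL;                  // available
```

In the Slot model, an Eloquent cast such as `'status' => SlotStatus::class` makes `$slot->status` come back as an enum case. That matters for the strict check `$slot->status !== SlotStatus::Available` in the booking tool: without the cast, `$slot->status` is a plain string and that comparison is always true.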

Seed the database with sample data for services and slots over the next 7 days.
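The seeding step can be sketched in plain PHP as a function that produces one row per service, day and start time. The helper name `buildSlotRows`, the `time` column and the sample times are assumptions for illustration; `service_id`, `day` and `status` are the columns the agent's queries use. In a Laravel seeder you could hand the resulting array to `Slot::insert()`.

```php
<?php

// Hypothetical seeding helper: returns plain arrays of slot rows.
// Assumed columns: service_id, day, time, status.
function buildSlotRows(array $serviceIds, array $times, DateTimeImmutable $start, int $days = 7): array
{
    $rows = [];
    for ($offset = 0; $offset < $days; $offset++) {
        // Offset 0 is the start date itself, offset 6 is six days later.
        $day = $start->modify("+{$offset} days")->format('Y-m-d');
        foreach ($serviceIds as $serviceId) {
            foreach ($times as $time) {
                $rows[] = [
                    'service_id' => $serviceId,
                    'day' => $day,
                    'time' => $time,
                    // Raw string value: a bulk Slot::insert() bypasses Eloquent casts.
                    'status' => 'available',
                ];
            }
        }
    }
    return $rows;
}

$rows = buildSlotRows([1, 2], ['09:00', '11:00', '14:00'], new DateTimeImmutable('2025-01-01'));
echo count($rows), PHP_EOL;      // 42 (7 days x 2 services x 3 times)
echo $rows[0]['day'], PHP_EOL;   // 2025-01-01
echo $rows[41]['day'], PHP_EOL;  // 2025-01-07
```

Building plain arrays first keeps the date arithmetic easy to test; note that a bulk insert also skips Eloquent timestamps, so add created_at/updated_at yourself if you need them.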

Install & Configure LarAgent

Install the package:

```bash
composer require maestroerror/laragent
php artisan vendor:publish --tag="laragent-config"
```

Then, in config/laragent.php, define a new provider for Ollama:

```php
'providers' => [

    // ...

    'ollama' => [
        'name' => 'ollama-local',
        'model' => 'llama3.2:3b',
        'driver' => \LarAgent\Drivers\OpenAi\OpenAiCompatible::class,
        'api_key' => 'ollama',
        'api_url' => 'http://localhost:11434/v1',
        'default_context_window' => 50000,
        'default_max_completion_tokens' => 100,
        'default_temperature' => 1,
    ],

],
```

Create the Appointment Agent

Generate the agent:

```bash
php artisan make:agent AppointmentAssistant
```

This creates App\AiAgents\AppointmentAssistant.php. Configure it to:

- Use file-based history (easier for debugging)

- Load instructions and prompt from Blade views

```php
<?php

namespace App\AiAgents;

use LarAgent\Agent;
use App\Models\Service;
use App\Models\Slot;
use App\Enums\SlotStatus;
use LarAgent\Attributes\Tool;
use Illuminate\Support\Facades\Log;

class AppointmentAssistant extends Agent
{
    protected $history = 'file';

    protected $provider = 'ollama';

    protected $tools = [];

    public function instructions()
    {
        $services = Service::active()->get();

        return view('AppointmentAssistant.instructions', ['services' => $services])->render();
    }

    public function prompt($message)
    {
        $currentDate = now()->format('Y-m-d');

        return view('AppointmentAssistant.prompt', [
            'message' => $message,
            'currentDate' => $currentDate,
        ])->render();
    }
}
```

The two Blade views live in resources/views/AppointmentAssistant/:

- instructions.blade.php: Describes the agent's behavior, tools, and services

- prompt.blade.php: Injects dynamic values like today's date and user message

Define AI Agent Tools

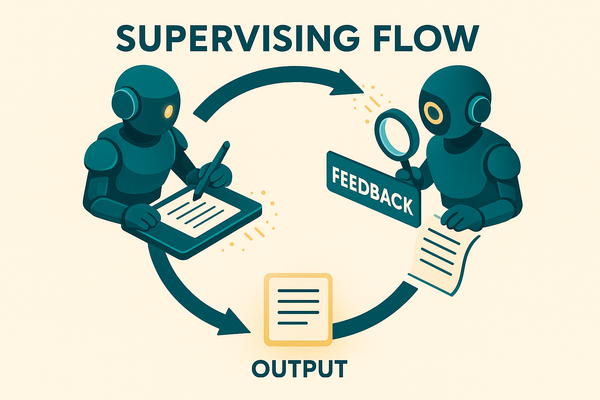

In this case, we will use the Tool attribute to create tools directly from our Agent’s class methods. Check the LarAgent docs for other ways of creating tools.
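To see why an attribute on a method can be enough to define a tool, here is a self-contained sketch of the general technique: read the attribute and the method signature with PHP reflection and turn them into an OpenAI-style function definition. This is not LarAgent's implementation; the `Tool` attribute class, `DemoAgent`, `jsonType()` and `describeTools()` below are hypothetical stand-ins.

```php
<?php

// Hypothetical attribute for this demo only; it is NOT LarAgent\Attributes\Tool.
#[Attribute(Attribute::TARGET_METHOD)]
final class Tool
{
    public function __construct(public string $description)
    {
    }
}

// A toy class: one tool method and one ordinary helper that must be ignored.
final class DemoAgent
{
    #[Tool('Check availability for a specific service and date.')]
    public static function checkAvailability(int $serviceId, string $date): string
    {
        return "Checked service {$serviceId} on {$date}";
    }

    public static function helper(): void
    {
    }
}

// Map the PHP scalar types used here to JSON Schema types.
function jsonType(string $phpType): string
{
    return match ($phpType) {
        'int' => 'integer',
        'float' => 'number',
        'bool' => 'boolean',
        default => 'string',
    };
}

// Turn every #[Tool] method of $class into an OpenAI-style function definition.
function describeTools(string $class): array
{
    $tools = [];
    foreach ((new ReflectionClass($class))->getMethods() as $method) {
        $attributes = $method->getAttributes(Tool::class);
        if ($attributes === []) {
            continue; // not a tool
        }

        $properties = [];
        $required = [];
        foreach ($method->getParameters() as $param) {
            $type = $param->getType();
            $typeName = $type instanceof ReflectionNamedType ? $type->getName() : 'string';
            $properties[$param->getName()] = ['type' => jsonType($typeName)];
            if (!$param->isOptional()) {
                $required[] = $param->getName();
            }
        }

        $tools[] = [
            'type' => 'function',
            'function' => [
                'name' => $method->getName(),
                'description' => $attributes[0]->newInstance()->description,
                'parameters' => [
                    'type' => 'object',
                    'properties' => $properties,
                    'required' => $required,
                ],
            ],
        ];
    }
    return $tools;
}

echo json_encode(describeTools(DemoAgent::class), JSON_PRETTY_PRINT), PHP_EOL;
```

In a scheme like this, parameter types come from the PHP signature and the description from the attribute, so descriptive parameter names and clear tool descriptions are most of what the model gets to work with.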

🛠️ Tool 1: Check Availability

Checks for open time slots based on service and date.
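One PHP detail matters for this tool: Eloquent's get() returns a Collection object, and in PHP every object casts to true, even an empty one. The emptiness check therefore has to ask the collection (for example `$slots->isNotEmpty()`) rather than test `$slots` directly. The snippet uses the built-in ArrayObject as a stand-in for a collection so it runs without Laravel.

```php
<?php

// ArrayObject stands in for an empty Illuminate\Support\Collection.
$slots = new ArrayObject([]);

// Objects are always truthy, so `if ($slots)` would take the "found" branch.
var_dump((bool) $slots);      // bool(true)

// Counting (or isEmpty()/isNotEmpty() on a real Collection) gives the right answer.
var_dump(count($slots));      // int(0)
var_dump(count($slots) > 0);  // bool(false)
```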

```php
// ...

class AppointmentAssistant extends Agent
{
    // ...

    #[Tool("Check availability for a specific service and date to find available slots and slotId.")]
    public static function checkAvailability(int $serviceId, string $date)
    {
        $service = Service::find($serviceId);

        if (!$service) {
            return "Service not found";
        }

        // whereDate() compares only the date part of the 'day' column
        $slots = Slot::where('service_id', $serviceId)
            ->whereDate('day', \Carbon\Carbon::parse($date))
            ->where('status', SlotStatus::Available)
            ->get();

        // get() returns a Collection, which is always truthy, so check for emptiness explicitly
        if ($slots->isNotEmpty()) {
            return view('AppointmentAssistant.checkAvailability', [
                'service' => $service,
                'date' => $date,
                'slots' => $slots,
            ])->render();
        }

        return "No available slots for " . $service->name . " on " . $date;
    }

    // ...
}
```

Tool 2: Book Appointment

Books an appointment if a slot is available. Add this method to the AppointmentAssistant class:

```php
// ...

class AppointmentAssistant extends Agent
{
    // ...

    #[Tool("Book an appointment for a specific service, date and slotId after you have confirmed name and email with the user.")]
    public static function bookAppointment(int $slotId, string $name, string $email, string $date)
    {
        $slot = Slot::find($slotId);

        if (!$slot) {
            return "Slot is not found, try again with correct slot ID";
        }

        // Strict comparison: relies on the Slot model casting 'status' to SlotStatus
        if ($slot->status !== SlotStatus::Available) {
            return "Slot is not available, check availability again";
        }

        $slot->status = SlotStatus::Booked;
        $slot->customer_name = $name;
        $slot->customer_email = $email;
        $slot->save();

        return "Appointment booked successfully";
    }

    // ...
}
```

Tool 3: Transfer to Manager

Fallback tool in case the customer wants to speak with a human.

```php
// ...

#[Tool("Transfer the conversation to a manager. This is a fallback tool. Use only when asked to contact human")]
public static function transferToManager(string $name, string $email, string $comment_or_extra_info)
{
    // Log for testing purposes, you can send email to a manager with this info
    Log::info("Transfer to manager: " . $name . " - " . $email . " - " . $comment_or_extra_info);

    return "Here is the contact info of manager: Angelina (+55 19 9884 88 4155)";
}

// ...
```

Test Your Agent

Use this artisan command to start chatting:

```bash
php artisan agent:chat AppointmentAssistant
```

The agent can recommend services, check availability using real-time data, book appointments, and even transfer the conversation to a human.

Final Thoughts

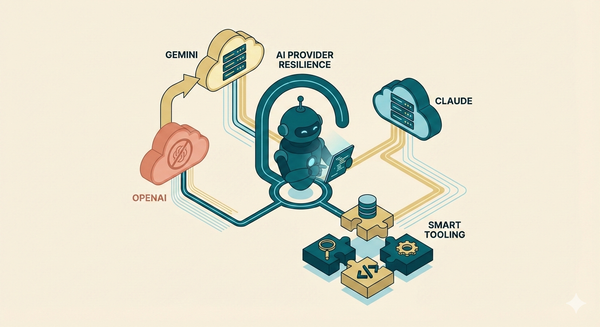

This setup is a game-changer for Laravel developers looking to build AI-powered tools. You get the freedom to iterate locally, zero token costs, and the option to switch to OpenAI or other APIs later with minimal changes.

Another bonus: if your agent works with llama3.2 (even if not perfectly), it is likely to work even better with a production-ready model such as gpt-4o-mini.

Happy coding!

✅ FAQs

Q1: Do I need a GPU to run Ollama?

No, most models (like llama3.2:3b) can run on CPU, but a GPU will speed things up.

Q2: Can I switch to GPT-4 later?

Yes! Add the provider (e.g., OpenAI) to config/laragent.php and point the agent's $provider property at it; LarAgent’s driver structure keeps your tools and prompts unchanged.

Q3: Is this setup production-ready?

It’s perfect for development. For production, use cloud-hosted models or a dedicated local server.

Q4: Can I use this for other businesses?

Absolutely. Just change the service logic and prompts—it's fully modular.

Q5: What other models can I use with Ollama?

Many! You can browse Ollama’s model library. Just make sure the model you pick supports tool calling.

Q6: Where’s the source code?

Check the video description, or resources below—the full project is linked on GitHub.

Resources

- Ollama

- LarAgent

- Laravel

- How to run DeepSeek locally on Windows in 3 simple steps (Includes ollama setup tutorial)

- YouTube video - Build a Smart AI Agent in Laravel Using Local LLMs (No Token Costs!)